# CUDA block dimensions: dim3

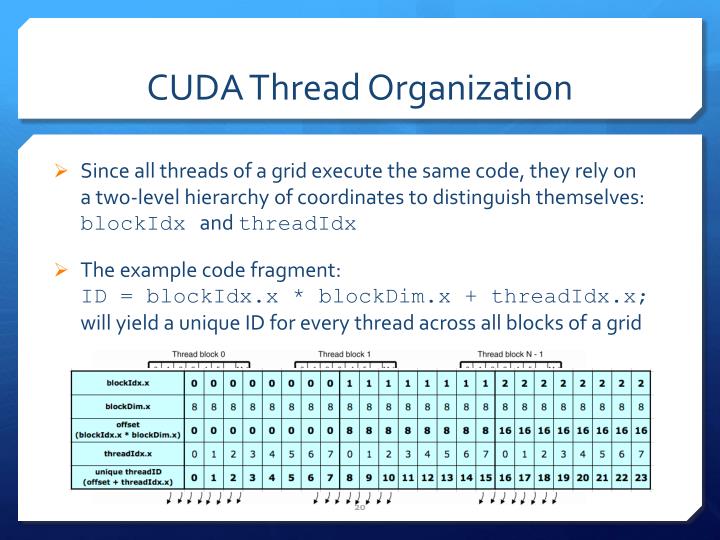

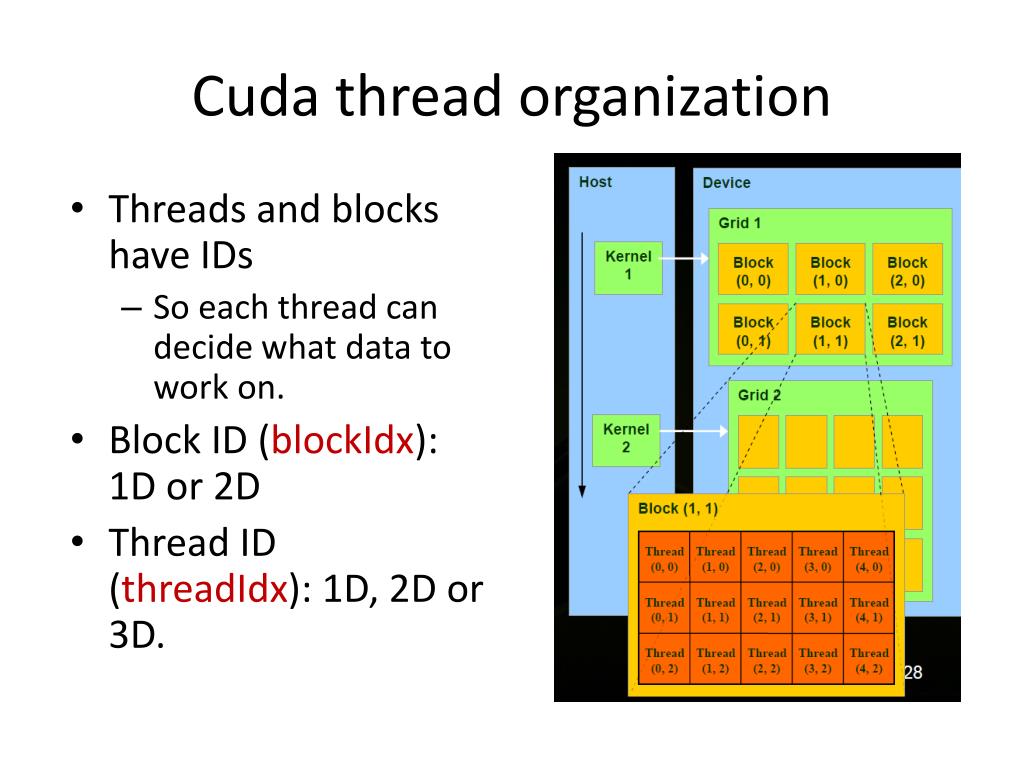

The main point is that `__syncthreads()` is a block-wide operation: it does not synchronize all threads. If a kernel that calls `func1()` and then `func2()`, with `__syncthreads()` in between, is executed with 600 threads, then the kernel must run twice and the order of execution will be:

1. func1 is executed for the first 512 threads.
2. func2 is executed for the first 512 threads.
3. func1 is executed for the remaining threads.
4. func2 is executed for the remaining threads.

To my understanding it's more accurate to say: func1 is executed at least for the first 512 threads. I'm not sure about the exact number of threads that `__syncthreads()` can synchronize, since you can create a block with more than 512 threads and let the warp handle the scheduling. Before I edited this answer (back in 2010) I measured that 14x8x32 threads were synchronized using `__syncthreads()`; I added some cuprintf statements there to check the number of threads. I would greatly appreciate it if someone tested this again for a more accurate piece of information.

`dim3` is an integer vector type based on `uint3` that is used to specify dimensions. When defining a variable of type `dim3`, any component left unspecified is initialized to 1. For example, `dim3 block(1024, 1024);` describes 1024 x 1024 x 1 threads. This shows the default for z, although a block of that size exceeds the 1024 threads per block limit and could not actually be launched.

On sizing the grid for an image: in the first attempt each block processes 32 * 32 pixels and there are (totalPixels / 32) * (totalPixels / 32) blocks, so you process totalPixels^2 pixels, which seems wrong. The first version was wrong; this should be the correct one: `const dim3 blockSize(32, 32, 1); size_t gridCols = (numCols + blockSize.x - 1) / blockSize.x; size_t gridRows = (numRows + blockSize.y - 1) / ...`
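The defaulting rule of `dim3` and the rounded-up grid size described above can be checked on the host without a GPU. The following is a minimal sketch in plain C++, not CUDA code: `Dim3`, `ceil_div` and `grid_for_image` are hypothetical names introduced here for illustration, and `Dim3` only imitates the one property of `dim3` stated above (unspecified components become 1). It assumes the truncated `gridRows` expression follows the same pattern as `gridCols`.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical host-side stand-in for CUDA's dim3:
// any component left unspecified is initialized to 1.
struct Dim3 {
    unsigned int x, y, z;
    Dim3(unsigned int x_ = 1, unsigned int y_ = 1, unsigned int z_ = 1)
        : x(x_), y(y_), z(z_) {}
};

// Ceiling division: how many blocks of size `block` are needed to cover `n` items.
std::size_t ceil_div(std::size_t n, std::size_t block) {
    assert(block > 0);
    return (n + block - 1) / block;
}

// Grid that covers a numCols x numRows image with the given block size.
// Assumption: rows are rounded up the same way as columns.
Dim3 grid_for_image(std::size_t numCols, std::size_t numRows, const Dim3& blockSize) {
    return Dim3(static_cast<unsigned int>(ceil_div(numCols, blockSize.x)),
                static_cast<unsigned int>(ceil_div(numRows, blockSize.y)));
}
```

With a 32 x 32 block, `grid_for_image(1000, 600, Dim3(32, 32))` asks for 32 x 19 blocks, so the last block in each direction is only partly filled. That is the price of rounding up rather than dropping the edge pixels.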

Some numbers for the GPU measured above: each SM has 8 thread processors (also known as stream processors, SPs, or cores), and the warp size is 32, which means each of the 14 x 8 = 112 thread processors can schedule up to 32 threads. On that hardware a block cannot have more than 512 active threads, therefore `__syncthreads()` can only synchronize a limited number of threads.

Multi-dimensional block and thread indices are among the basic concepts involved in a simple CUDA kernel function. When the grid, block, and thread parameters are all one-dimensional, plain integers are enough. When you need to define a grid or block with two or more dimensions, you only need to use the `dim3` type of CUDA C. For example, `dim3 block(2, 2)` defines a 2 x 2 thread block. For three dimensions you only need to add one more parameter: `dim3 block(2, 2, 2)`. That's it.

In the kernel, the pixel (i, j) to be processed by a thread is calculated this way: `uint i = (blockIdx.x * blockDim.x) + threadIdx.x; uint j = (blockIdx.y * blockDim.y) + threadIdx.y;`. However, the access pattern depends on how you interpret your data and on whether you access it with 1D, 2D, or 3D blocks of threads.
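To see that the index formula above assigns every pixel to exactly one thread, the launch can be emulated on the host. This is a sketch in plain C++ rather than a real kernel: `Idx2`, `global_index` and `emulate_launch` are hypothetical names, the nested loops stand in for the blocks and threads the GPU would run in parallel, and the bounds check is an added assumption (a common guard when the image size is not a multiple of the block size).

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical 2D size/index pair standing in for the x and y fields of dim3.
struct Idx2 {
    unsigned int x;
    unsigned int y;
};

// Same arithmetic as in the kernel: (blockIdx * blockDim) + threadIdx.
unsigned int global_index(unsigned int blockIndex, unsigned int blockSize,
                          unsigned int threadIndex) {
    return blockIndex * blockSize + threadIndex;
}

// Visit every (block, thread) pair of a 2D launch sequentially and count
// how many times each pixel of a numCols x numRows image is touched.
std::vector<int> emulate_launch(unsigned int numCols, unsigned int numRows, Idx2 blockSize) {
    assert(blockSize.x > 0 && blockSize.y > 0);
    // Round the grid up so that edge pixels are covered.
    const Idx2 gridSize = {(numCols + blockSize.x - 1) / blockSize.x,
                           (numRows + blockSize.y - 1) / blockSize.y};
    std::vector<int> hits(static_cast<std::size_t>(numCols) * numRows, 0);
    for (unsigned int by = 0; by < gridSize.y; ++by)
        for (unsigned int bx = 0; bx < gridSize.x; ++bx)
            for (unsigned int ty = 0; ty < blockSize.y; ++ty)
                for (unsigned int tx = 0; tx < blockSize.x; ++tx) {
                    const unsigned int i = global_index(bx, blockSize.x, tx);
                    const unsigned int j = global_index(by, blockSize.y, ty);
                    // Threads that fall outside the image do nothing.
                    if (i < numCols && j < numRows) {
                        ++hits[static_cast<std::size_t>(j) * numCols + i];
                    }
                }
    return hits;
}
```

For a 5 x 3 image and 2 x 2 blocks the emulation runs 24 "threads" for 15 pixels; the guard discards the 9 that land outside the image, and every entry of the returned vector is 1.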

`dim3` is just a structure designed for storing block and grid dimensions (SimonGreen, May 30, 2008). `dimBlock()` and `dimGrid()` set the initial values using constructors. The dimension of the thread block is accessible within the kernel through `blockDim`. See the programming guide, section 4.3.1.

Thread blocks can be partitioned into individual threads in 1, 2 or 3 dimensions (depending on the compute capability of the GPU device). The threads of a block can be identified (indexed) using 1 dimension (x), 2 dimensions (x, y) or 3 dimensions (x, y, z), but in any case the product x * y * z must not exceed the maximum number of threads per block. The CUDA architecture limits the number of threads per block (1024 threads per block limit). The kernel is then launched with `<<<dimGrid, dimBlock>>>( /* params for the kernel function */ )`.

A block is executed by a multiprocessing unit. If a GPU device has, for example, 4 multiprocessing units, and they can run 768 threads each, then at a given moment no more than 4 * 768 threads will really be running in parallel (if you planned more threads, they will be waiting their turn). Finally, there will be something like "a queue of 4096 blocks", where each block is waiting to be assigned to one of the multiprocessors of the GPU to get its 64 threads executed.
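The two limits discussed above are simple arithmetic, so they can be sketched as host-side C++ helpers. The function names are hypothetical, and the default of 1024 is the per-block figure quoted in the text, not a value read from a device. The sketch checks only the product x * y * z and ignores any other restriction a real device may impose.

```cpp
#include <cassert>
#include <cstddef>

// Total number of threads in a block with the given dimensions.
std::size_t threads_per_block(unsigned int x, unsigned int y = 1, unsigned int z = 1) {
    return static_cast<std::size_t>(x) * y * z;
}

// True if x * y * z stays within the per-block thread limit.
// Assumption: 1024 is the limit quoted in the text; older hardware used 512.
bool block_fits(unsigned int x, unsigned int y, unsigned int z,
                std::size_t maxThreadsPerBlock = 1024) {
    assert(maxThreadsPerBlock > 0);
    return threads_per_block(x, y, z) <= maxThreadsPerBlock;
}

// Upper bound on threads running at the same moment on the whole device.
std::size_t max_concurrent_threads(std::size_t numMultiprocessors,
                                   std::size_t threadsPerMultiprocessor) {
    return numMultiprocessors * threadsPerMultiprocessor;
}
```

For the example in the text, `max_concurrent_threads(4, 768)` gives 3072, while a queue of 4096 blocks of 64 threads (for instance 8 x 8) adds up to 262144 threads, so most blocks wait for a free multiprocessor. On a real device the limits are better read from `cudaGetDeviceProperties` (for example the `maxThreadsPerBlock` field) than hard-coded.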
